| |

| James H. Bassett, “Okapi,” Bassett Associates Archive, accessed August 5, 2023, https://bassettassociates.org/archive/items/show/337. Available under a CC BY 4.0 license. |

Several years ago, I was given access to the digital files of Bassett Associates, a landscape architectural firm that operated for over 60 years in Lima, Ohio. This award-winning firm, which disbanded in 2017, was well known for its zoological design work and also did ground-breaking work in incorporating stormwater retention into landscape site design. In addition to images of plans and site photographs, the files also included scans of sketches done by the firm's founder, James H. Bassett, which were artworks in their own right. I had been deliberating about the best way to make these works publicly available and decided that this summer I would make it my project to set up an online digital archive featuring some of the images from the files.

Given my background as a Data Science and Data Curation Specialist at the Vanderbilt Libraries, it seemed like a good exercise to figure out how to set up Omeka Classic on Amazon Web Services (AWS), Vanderbilt's preferred cloud computing platform. Omeka is a free, open-source web platform that is popular in the library and digital humanities communities for creating online digital collections and exhibits, so it seemed like a good choice for me given that I would be funding this project on my own.

Preliminaries

The hard drive I have contains about 70 000 files collected over several decades, so the first task was to sort through the directories to figure out exactly what was there. For some of the later projects, there were some born-digital files, but the majority of the images were either digitizations of paper plans and sketches, or scans of 35mm slides. In some cases, the same original work was present in several places on the drive at a variety of resolutions, so I needed to sort out where the highest quality files were located. Fortunately, some of the best works from signature projects had been digitized for an art exhibition, "James H. Bassett, Landscape Architect: A Retrospective Exhibition 1952-2001", that took place at Artspace/Lima in 2001. Most of the digitized files were high-resolution TIFFs, which were ideal for preservation use. I focused on building the online image collection by featuring projects that were highlighted in that exhibition, since they covered the breadth of types of work done by the firm throughout its history.

The second major issue was to resolve the intellectual property status of the images. Some had previously been published in reports and brochures, and some had not. Some were from before the 1987 copyright law went into effect and some were after. Some could be attributed directly to James Bassett before the Bassett Associates corporation was formed and others could not be attributed to any particular individual. Fortunately, I was able to get permission from Mr. Bassett and the other two owners of the corporation when it disbanded to make the images freely available under a Creative Commons Attribution 4.0 International (CC BY 4.0) license. This basically eliminated complications around determining the copyright status of any particular work, and allows the images to be used by anyone as long as they provide the requested citation.

|

| TIFF pyramid for a sketch of the African plains exhibit at the Kansas City Zoo. James H. Bassett, “African Plains,” Bassett Associates Archive, accessed August 6, 2023, https://bassettassociates.org/archive/items/show/415. Available under a CC BY 4.0 license. |

Image pre-processing

For several years I have been investigating how to make use of the International Image Interoperability Framework (IIIF) to provide a richer image viewing experience. Based on previous work and experimentation with our Libraries' Cantaloupe IIIF server, I knew that large TIFF images needed to be converted to tiled pyramidal (multi-resolution) form to be effectively served. I also discovered that TIFFs using CMYK color mode did not display properly when served by Cantaloupe. So the first image processing step was to open TIFF or Photoshop format images in Photoshop, flatten any layers, convert to RGB color mode if necessary, reduce the image size to less than 35 MB (more on size limits later), and save the image in TIFF format. JPEG files were not modified -- I just used the highest resolution copy that I could find.

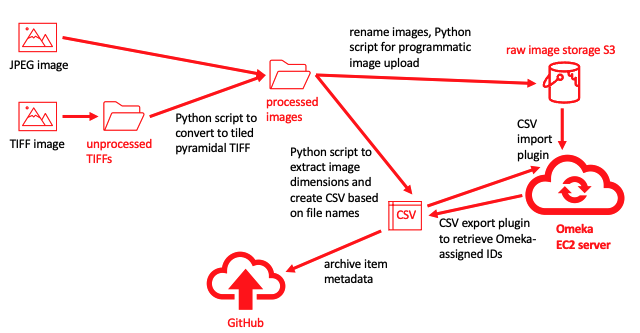

Because I wanted to make it easy in the future to use the images with IIIF, I used a Python script that I wrote to convert single-resolution TIFFs en masse to tiled pyramidal TIFFs via ImageMagick. These processed TIFFs or high-resolution JPEGs were the original files that I eventually uploaded to Omeka.
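A batch conversion of this kind can be sketched roughly as follows. This is not the script I used, just a minimal illustration that assumes ImageMagick is installed and available as `convert` (on ImageMagick 7 the executable is usually `magick`). The function names, the 256-pixel tile size, and the LZW compression setting are all assumptions made for the example.

```python
import subprocess
from pathlib import Path


def build_ptif_command(source: Path, destination: Path,
                       tile_size: int = 256, compression: str = "lzw") -> list[str]:
    """Build an ImageMagick command that writes a tiled pyramidal TIFF.

    The "ptif:" output prefix asks ImageMagick for a pyramid (multi-resolution)
    TIFF; the tile-geometry define makes each layer tiled. Tile size and
    compression are assumed values, not settings from the original script.
    """
    return [
        "convert",
        str(source),
        "-define", f"tiff:tile-geometry={tile_size}x{tile_size}",
        "-compress", compression,
        f"ptif:{destination}",
    ]


def convert_directory(source_dir: Path, output_dir: Path) -> list[Path]:
    """Convert every .tif/.tiff file in source_dir, returning the files written."""
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for source in sorted(source_dir.iterdir()):
        if source.suffix.lower() not in {".tif", ".tiff"}:
            continue  # JPEGs and other files are left untouched
        destination = output_dir / f"{source.stem}.tif"
        # check=True raises an error if ImageMagick fails on a file
        subprocess.run(build_ptif_command(source, destination), check=True)
        written.append(destination)
    return written
```

Calling `convert_directory(Path("raw_tiffs"), Path("pyramidal_tiffs"))` (both directory names are placeholders) would process a whole folder in one pass.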

Why use AWS?

One of my primary reasons for using AWS as the hosting platform was the availability of S3 bucket storage. AWS S3 storage is very inexpensive and by storing the images there rather than within the file storage attached to the cloud server, the image storage capacity could basically expand indefinitely without requiring any changes to the configuration of the cloud server hosting the site. Fortunately, there is an Omeka plug-in that makes it easy to configure storage in S3.

Another advantage (not realized in this project) is that because image storage is outside the server in a public S3 bucket, the same image files can be used as source files for a Cantaloupe installation. Thus a single copy of an image in S3 can serve the purpose of provisioning Omeka, being the source file for IIIF image variants served by Cantaloupe, and having a citable, stable URL that allows the original raw image to be downloaded by anyone.

I've also determined through experimentation that one can run a relatively low-traffic Omeka site on AWS using a single t2.micro tier Elastic Compute Cloud (EC2) server. This minimally provisioned server currently costs only US$ 0.0116 per hour (about $8 per month) and is "free tier eligible", meaning that new users could run an Omeka site on EC2 for free during the first year. Including the cost of the S3 storage, one could run an Omeka site on AWS with hundreds of images for under $10 per month.
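The arithmetic behind that estimate can be sketched in a few lines. The hourly EC2 rate comes from the figure above; the S3 price of US$ 0.023 per GB per month and the 20 GB of stored images are assumptions used only for illustration, and request and data-transfer charges are ignored.

```python
HOURS_PER_MONTH = 730  # average month: 8760 hours per year / 12


def monthly_cost(ec2_hourly_usd: float, storage_gb: float,
                 s3_usd_per_gb_month: float = 0.023) -> float:
    """Rough monthly cost in US dollars for one EC2 instance plus S3 storage.

    The default S3 rate is an assumed value; check current AWS pricing
    for your region before relying on it.
    """
    ec2_cost = ec2_hourly_usd * HOURS_PER_MONTH
    s3_cost = storage_gb * s3_usd_per_gb_month
    return round(ec2_cost + s3_cost, 2)


# t2.micro at the rate quoted above, with an assumed 20 GB of images in S3
estimate = monthly_cost(0.0116, 20)
```

Under those assumptions the estimate stays below $10 per month, with the EC2 instance accounting for nearly all of it.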

The down side

The main problem with installing Omeka on AWS is that it is not a beginner-level project. I'm relatively well-acquainted with AWS and the Unix command line, but it took me a couple of months on and off to figure out how to get all of the necessary pieces to work together. Unfortunately, there wasn't a single web page that laid out all of the steps, so I had to read a number of blog posts and articles, then do a lot of experimenting to get the whole thing to work. I did take detailed notes, including all of the necessary commands and configuration details, so it should be possible for someone with moderate command-line skills and a willingness to learn the basics of AWS to replicate what I did.

Installation summary

Omeka installation on EC2

http://54.243.224.52/archive/

Mapping an Elastic IP address to a custom domain and enabling secure HTTP

Optional additional steps

Efficient workflow

The workflow described here is based on assembling the metadata in the most automated way possible, using file naming conventions, a Python script, and programmatically created CSV files. Python scripts are also used to upload the files to S3, and from there they can be automatically imported into Omeka.
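To give a sense of how file names can drive the metadata, here is a minimal sketch. The naming convention (`project_sequence_title-words.tif`), the column names, and the bucket name are all hypothetical and were invented for this example; they are not the conventions used in the actual scripts. The only real-world detail relied on is the standard virtual-hosted URL pattern for objects in a public S3 bucket.

```python
import csv
import io
from pathlib import PurePosixPath
from urllib.parse import quote

FIELDNAMES = ["local_id", "title", "file_url"]  # hypothetical column names


def row_from_filename(filename: str, bucket: str) -> dict:
    """Derive one metadata row from a name like 'kcz_001_african-plains.tif'."""
    stem = PurePosixPath(filename).stem
    project, sequence, slug = stem.split("_", 2)
    return {
        "local_id": f"{project}_{sequence}",
        "title": slug.replace("-", " ").title(),
        # URL the file would have after upload to a public S3 bucket
        "file_url": f"https://{bucket}.s3.amazonaws.com/{quote(filename)}",
    }


def build_metadata_csv(filenames: list[str], bucket: str) -> str:
    """Return CSV text with one row per file, ready to be saved for import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    for name in sorted(filenames):
        writer.writerow(row_from_filename(name, bucket))
    return buffer.getvalue()
```

A CSV built this way carries a URL for each file, which is what allows the import step to pull the images from S3 rather than from a local drive.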

After the items are imported, the CSV export plugin can be used to extract the ID numbers assigned to the items by Omeka. A Python script then extracts the IDs from the resulting CSV and inserts them into the original CSVs used to assemble the metadata.
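The ID merge step might look something like the sketch below. The column names (`id`, `title`, `omeka_id`) are assumptions, since the real export columns depend on how the plugin is configured, and matching rows on the title only works if titles are unique.

```python
import csv
import io


def add_omeka_ids(metadata_csv: str, export_csv: str,
                  key: str = "title", id_column: str = "id") -> str:
    """Copy Omeka item IDs from an export CSV into the metadata CSV.

    Rows are matched on the `key` column (an assumed column name). Rows with
    no match keep any existing omeka_id value or get an empty string.
    """
    exported = {
        row[key]: row[id_column]
        for row in csv.DictReader(io.StringIO(export_csv))
    }

    reader = csv.DictReader(io.StringIO(metadata_csv))
    fieldnames = list(reader.fieldnames)
    if "omeka_id" not in fieldnames:
        fieldnames.append("omeka_id")

    output = io.StringIO()
    writer = csv.DictWriter(output, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        row["omeka_id"] = exported.get(row[key], row.get("omeka_id") or "")
        writer.writerow(row)
    return output.getvalue()
```

Keeping the Omeka ID next to the local identifier makes it possible to build item URLs later without looking each one up by hand.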

Notes about TIFF image files

Note about file sizes

The other thing to consider is that when TIFFs are converted to tiled pyramidal form, there is an increase in size of roughly 25% when the low-res layers are added to the original high-res layer. So a 40 MB raw TIFF may be at or over 50 MB after conversion. I have found that if I keep the original file size below 35 MB, the files usually load without problems. It is annoying to have to decrease the resolution of any source files in order to add them to a digital collection, but there is a workaround (described in the IIIF section below) for extremely large TIFF image files.
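That rule of thumb is easy to turn into a quick check before conversion. In this sketch the 25% overhead comes from the observation above, while the 50 MB ceiling passed in the example calls is an assumption taken from the 40 MB example; substitute whatever limit applies to your setup.

```python
def estimated_pyramid_mb(raw_mb: float, overhead: float = 0.25) -> float:
    """Estimate the size of a pyramidal TIFF from the single-resolution size."""
    return raw_mb * (1 + overhead)


def fits_limit(raw_mb: float, limit_mb: float, overhead: float = 0.25) -> bool:
    """True if the converted file is expected to stay under limit_mb."""
    return estimated_pyramid_mb(raw_mb, overhead) < limit_mb


# A 35 MB source is expected to grow to about 43.75 MB and pass an
# assumed 50 MB ceiling; a 40 MB source is expected to reach it.
small_ok = fits_limit(35, limit_mb=50)
large_ok = fits_limit(40, limit_mb=50)
```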

The CSV Import plugin

Omeka identifiers

Backing up the data

The most straightforward backup method is to create an Amazon Machine Image (AMI) of the EC2 server. Not only will this save all of your data, but it will also archive the complete configuration of the server at the time the image is made. This is critical if you have any disasters while making major configuration changes and need to roll back the EC2 instance to an earlier (functional) state. It is quite easy to roll back to an AMI and re-assign the Elastic IP to the new EC2 instance. However, this rollback will have no impact on any files saved in S3 by Omeka after the time when the backup AMI was created. Those files won't hurt anything, but they will effectively be uselessly orphaned there.
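An AMI can be created through the AWS console, but it can also be scripted. The sketch below assumes the `boto3` library and configured AWS credentials; the function name, the name prefix, and the region shown in the comment are hypothetical. The client is passed in as an argument so that the function itself does not depend on any particular account setup.

```python
from datetime import datetime, timezone


def create_backup_ami(ec2_client, instance_id: str,
                      name_prefix: str = "omeka-backup") -> str:
    """Ask EC2 to create an AMI of the given instance and return the image ID.

    ec2_client is expected to be a boto3 EC2 client. With NoReboot=False the
    instance is shut down and rebooted during imaging, so the site will be
    briefly unavailable but the file system is captured in a consistent state.
    """
    timestamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    response = ec2_client.create_image(
        InstanceId=instance_id,
        Name=f"{name_prefix}-{timestamp}",
        Description=f"Backup of {instance_id} before configuration changes",
        NoReboot=False,
    )
    return response["ImageId"]


# Example use (requires boto3 and credentials; the region is an assumption):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")
#   image_id = create_backup_ami(ec2, "i-0123456789abcdef0")
```

Putting a timestamp in the image name makes it easier to tell later which AMI predates a given configuration change.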

The CSV files pushed to GitHub after each CSV import (example) can also be used as a sort of backup. Any set of rows from the saved metadata CSV file can be used to re-upload those items onto any Omeka instance as long as the original files are still in the raw source image S3 bucket. Of course, if you make manual edits to the metadata, the metadata in the CSV file would become stale.

Using IIIF tools in Omeka

The UniversalViewer plugin allows Omeka to serve images like a IIIF image server and it generates IIIF manifests using the existing metadata. That makes it possible for the Universal Viewer player (included in the plugin) to display images in a rich manner that allows pan and zoom. This plugin was very appealing to me because if it functioned well, it would enable IIIF capabilities without needing to manage any other servers. I was able to install it and the embedded Universal Viewer did launch, but the images never loaded in the viewer. Despite spending a lot of time messing around with the settings, disabling S3 storage, and launching a larger EC2 image, I was never able to get it to work, even for a tiny JPEG file. I read a number of Omeka forum posts about troubleshooting, but eventually gave up.

If I had gotten it to work, there was one potential problem with the setup anyway. The t2.micro instance that I'm running has very low resource capacity (memory, number of CPUs, drive storage), which is OK as I've configured it because the server just has to run a relatively tiny MySQL database and serve static files from S3. But presumably this plugin would also have to generate the image variants that it's serving on the fly and that could max out the server quite easily. I'm disappointed that I couldn't get it to work, but I'm not confident that it's the right tool for a budget installation like this one.

I had more success with the IIIF Toolkit plugin. It also provides an embedded Universal Viewer that can be inserted in various places in Omeka. The major downside is that you must have access to a separate IIIF server to actually provide the images used in the viewer. I was able to test it out by loading images into the Vanderbilt Libraries' Cantaloupe IIIF server and it worked pretty well. However, setting up your own Cantaloupe server on AWS does not appear to be a trivial task and because of the resources required for the IIIF server to run effectively, it would probably cost a lot more per month to operate than the Omeka site itself. (Vanderbilt's server is running on a cluster with a load balancer, 2 vCPU, and 4 GB memory. All of these increases over a basic single t2.micro instance would involve a significantly increased cost.) So in the absence of an available external IIIF server, this plugin probably would not be useful for an independent user with a small budget.

One nice feature that I was not able to try was pointing the external server to the `original` folder of the S3 storage bucket. That would be a really nice feature since it would not require loading the images separately into dedicated storage for the IIIF server separate from what is already being provisioned for Omeka. Unfortunately, we have not yet got that working on the Libraries' Cantaloupe server as it seems to require some custom Ruby coding to implement.

Importing a manifest is the most straightforward method because it only requires a manifest URL (commonly available from many sources). But the import was messy and always created a new collection for each item imported. In theory, this could be avoided by selecting an existing collection using the `Parent` dropdown, but that feature never worked for me.

I concluded that importing canvases was the only feasible method. Unfortunately, canvas JSON usually doesn't exist in isolation -- it usually is part of the JSON for an entire manifest. The `From Paste` option is useful if you are capable of the tedious task of searching through the JSON of a whole manifest and copying just the JSON for a single canvas from it. I found it much more useful to just create a Python script to generate minimal canvas JSON for an image and save it as a file, which could either be uploaded directly, or pushed to the web and read in through a URL. It gets the pixel dimensions from the image file, with labels and descriptions taken from a CSV file (the IIIF import does not use more information than that). These values are inserted into a JSON canvas template, then saved as a file. The script will loop through an entire directory of files, so it's relatively easy to make canvases for a number of images that were already uploaded using the CSV import function (just copy and paste labels and descriptions from the metadata CSV file). Once the canvases have been generated, either upload them or paste their URLs (if they were pushed to the web) on the IIIF Toolkit Import Items page.
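The core of such a script is just filling in a template. The following is a minimal sketch rather than the actual script: it assumes IIIF Presentation API 2 style canvases and a level 2 Image API service, takes the pixel dimensions as arguments instead of reading them from the image file (which would need an imaging library such as Pillow), and uses made-up identifiers in the usage comment.

```python
import json


def make_canvas(canvas_id: str, image_service_url: str, width: int, height: int,
                label: str, description: str = "") -> dict:
    """Build a minimal IIIF Presentation 2 canvas with one painted image."""
    return {
        "@context": "http://iiif.io/api/presentation/2/context.json",
        "@id": canvas_id,
        "@type": "sc:Canvas",
        "label": label,
        "description": description,
        "width": width,
        "height": height,
        "images": [
            {
                "@type": "oa:Annotation",
                "motivation": "sc:painting",
                "on": canvas_id,  # the annotation targets this canvas
                "resource": {
                    # full-size JPEG derived from the image service
                    "@id": f"{image_service_url}/full/full/0/default.jpg",
                    "@type": "dctypes:Image",
                    "format": "image/jpeg",
                    "width": width,
                    "height": height,
                    "service": {
                        "@context": "http://iiif.io/api/image/2/context.json",
                        "@id": image_service_url,
                        "profile": "http://iiif.io/api/image/2/level2.json",
                    },
                },
            }
        ],
    }


def canvas_json(canvas: dict) -> str:
    """Serialize a canvas as indented JSON text, ready to save to a file."""
    return json.dumps(canvas, indent=2)


# Hypothetical identifiers, for illustration only:
#   canvas = make_canvas("https://example.org/canvas/plan-1",
#                        "https://iiif.example.org/iiif/2/plan-1.tif",
#                        6000, 4000, "Master Plan")
```

Looping this over the rows of a metadata CSV and writing each result to its own `.json` file gives one importable canvas per image.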

|

| Entire master plan image. Bassett Associates. “Binder Park Zoo Master Plan (IIIF),” Bassett Associates Archive, accessed August 6, 2023, https://bassettassociates.org/archive/items/show/418. Available under a CC BY 4.0 license. |
